The Cluster API Book

Note: Impatient readers may head straight to Quick Start.

Using Cluster API v1alpha1? Check the legacy documentation

What is the Cluster API?

The Cluster API is a Kubernetes project to bring declarative, Kubernetes-style APIs to cluster creation, configuration, and management. It provides optional, additive functionality on top of core Kubernetes to manage the lifecycle of a Kubernetes cluster.

Goals

- To manage the lifecycle (create, scale, upgrade, destroy) of Kubernetes-conformant clusters using a declarative API.

- To work in different environments, both on-premises and in the cloud.

- To define common operations, provide a default implementation, and provide the ability to swap out implementations for alternative ones.

- To reuse and integrate existing ecosystem components rather than duplicating their functionality (e.g. node-problem-detector, cluster autoscaler, SIG-Multi-cluster).

- To provide a transition path for Kubernetes lifecycle products to adopt Cluster API incrementally. Specifically, existing cluster lifecycle management tools should be able to adopt Cluster API in a staged manner, over the course of multiple releases, or even adopt only a subset of Cluster API.

Non-goals

- To add these APIs to Kubernetes core (kubernetes/kubernetes).

- This API should live in a namespace outside the core and follow the best practices defined by api-reviewers, but is not subject to core-api constraints.

- To manage the lifecycle of infrastructure unrelated to the running of Kubernetes-conformant clusters.

- To force all Kubernetes lifecycle products (kops, kubespray, GKE, AKS, EKS, IKS etc.) to support or use these APIs.

- To manage non-Cluster API provisioned Kubernetes-conformant clusters.

- To manage a single cluster spanning multiple infrastructure providers.

- To configure a machine at any time other than create or upgrade.

- To duplicate functionality that exists or is coming to other tooling, e.g., updating kubelet configuration (cf. dynamic kubelet configuration), or updating apiserver, controller-manager, and scheduler configuration (cf. the component-config effort) after the cluster is deployed.

Community, discussion, contribution, and support

- Chat with us on the Kubernetes Slack in the #cluster-api channel

- Subscribe to the SIG Cluster Lifecycle Google Group for access to documents and calendars

- Participate in the conversations on Kubernetes Discuss

- Join our Cluster API working group sessions where we share the latest project news, demos, answer questions, and triage issues

- Weekly on Wednesdays @ 10:00 PT on Zoom

- Previous meetings: [ notes | recordings ]

- Provider implementers office hours where you can ask questions related to developing providers for Cluster API

Pull Requests and feedback on issues are very welcome! See the issue tracker if you’re unsure where to start, especially the Good first issue and Help wanted tags, and also feel free to reach out to discuss.

See also: our own contributor guide and the Kubernetes community page.

Code of conduct

Participation in the Kubernetes community is governed by the Kubernetes Code of Conduct.

Quick Start

In this tutorial we’ll cover the basics of how to use Cluster API to create one or more Kubernetes clusters.

Installation

Prerequisites

- Install and set up kubectl in your local environment.

- Install and/or configure a management cluster

Setup Management Cluster

Cluster API requires an existing Kubernetes cluster that is accessible via kubectl. Choose one of the options below:

- Kind

kind create cluster --name=clusterapi

kubectl cluster-info --context kind-clusterapi

Because the Docker provider needs to access Docker on the host, a custom kind cluster configuration is required:

cat > kind-cluster-with-extramounts.yaml <<EOF
kind: Cluster
apiVersion: kind.sigs.k8s.io/v1alpha3
nodes:
  - role: control-plane
    extraMounts:
      - hostPath: /var/run/docker.sock
        containerPath: /var/run/docker.sock
EOF

kind create cluster --config ./kind-cluster-with-extramounts.yaml --name clusterapi

kubectl cluster-info --context kind-clusterapi

- Existing Management Cluster

For production use cases, a “real” Kubernetes cluster should be used, with appropriate backup and DR policies and procedures in place.

export KUBECONFIG=<...>

- Pivoting

Pivoting is the process of using an initial kind cluster to create a new workload cluster, and then converting the workload cluster into a management cluster by migrating the Cluster API CRDs.

Installation

Using kubectl, create the components on the management cluster:

Install Cluster API

kubectl create -f https://github.com/kubernetes-sigs/cluster-api/releases/download/v0.2.11/cluster-api-components.yaml

Install the Bootstrap Provider

Check the Kubeadm provider releases for an up-to-date components file.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-bootstrap-provider-kubeadm/releases/download/v0.1.7/bootstrap-components.yaml

Install Infrastructure Provider

Install clusterawsadm

Download the latest binary of clusterawsadm from the AWS provider releases and make sure to place it in your path.

Create the components

Check the AWS provider releases for an up-to-date components file.

# Create the base64 encoded credentials using clusterawsadm.

# This command uses your environment variables and encodes

# them in a value to be stored in a Kubernetes Secret.

export AWS_B64ENCODED_CREDENTIALS=$(clusterawsadm alpha bootstrap encode-aws-credentials)

# Create the components.

curl -L https://github.com/kubernetes-sigs/cluster-api-provider-aws/releases/download/v0.4.10/infrastructure-components.yaml \
  | envsubst \
  | kubectl create -f -

Check the Azure provider releases for an up-to-date components file.

# Create the base64 encoded credentials

export AZURE_SUBSCRIPTION_ID_B64="$(echo -n "$AZURE_SUBSCRIPTION_ID" | base64 | tr -d '\n')"

export AZURE_TENANT_ID_B64="$(echo -n "$AZURE_TENANT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_ID_B64="$(echo -n "$AZURE_CLIENT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_SECRET_B64="$(echo -n "$AZURE_CLIENT_SECRET" | base64 | tr -d '\n')"

curl -L https://github.com/kubernetes-sigs/cluster-api-provider-azure/releases/download/v0.3.1/infrastructure-components.yaml \
  | envsubst \
  | kubectl create -f -
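Note that envsubst replaces any unset variable with an empty string, so a missing credential can silently end up as a blank value in the generated Secret. A minimal pre-flight sketch, assuming a POSIX shell; the helper name `require_env` is hypothetical and not part of any provider tooling:

```shell
# require_env: hypothetical helper, not part of Cluster API or its providers.
# Returns non-zero (and reports each name on stderr) if any of the named
# environment variables is unset or empty.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup via eval keeps this POSIX sh compatible.
    # Only pass literal variable names, never untrusted input.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example use before the Azure pipeline above:
#   require_env AZURE_SUBSCRIPTION_ID AZURE_TENANT_ID \
#               AZURE_CLIENT_ID AZURE_CLIENT_SECRET || exit 1
```

The same check could be run with `AWS_B64ENCODED_CREDENTIALS` before the AWS pipeline.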

Check the Docker provider releases for an up-to-date components file.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-docker/releases/download/v0.2.1/provider-components.yaml

It is required to use an official CAPV machine image for your vSphere VM templates. See Uploading CAPV Machine Images for instructions on how to do this.

# Upload vCenter credentials as a Kubernetes secret

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  labels:
    control-plane: controller-manager
  name: capv-system
---
apiVersion: v1
kind: Secret
metadata:
  name: capv-manager-bootstrap-credentials
  namespace: capv-system
type: Opaque
# stringData accepts plain-text values; the data field would require base64.
stringData:
  username: "<my vCenter username>"
  password: "<my vCenter password>"
EOF

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-vsphere/releases/download/v0.5.5/infrastructure-components.yaml

Check the vSphere provider releases for an up-to-date components file.

For more information about prerequisites, credentials management, or permissions for vSphere, visit the getting started guide.

Check the OpenStack provider releases for an up-to-date components file.

For more detailed information, e.g. about prerequisites, visit the getting started guide.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-openstack/releases/download/v0.2.0/infrastructure-components.yaml

Usage

Now that we’ve got Cluster API, Bootstrap and Infrastructure resources installed, let’s proceed to create a single node cluster.

For the purpose of this tutorial, we’ll name our cluster capi-quickstart.

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Cluster
metadata:
  name: capi-quickstart
spec:
  clusterNetwork:
    pods:
      cidrBlocks: ["192.168.0.0/16"]
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: AWSCluster
    name: capi-quickstart
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AWSCluster
metadata:
  name: capi-quickstart
spec:
  # Change this value to the region you want to deploy the cluster in.
  region: us-east-1
  # Change this value to a valid SSH Key Pair present in your AWS Account.
  sshKeyName: default

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Cluster
metadata:
  name: capi-quickstart
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
        - 192.168.0.0/16
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: AzureCluster
    name: capi-quickstart
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AzureCluster
metadata:
  name: capi-quickstart
spec:
  # Change this value to the region you want to deploy the cluster in.
  location: southcentralus
  networkSpec:
    vnet:
      name: capi-quickstart-vnet
  # Change this value to the resource group you want to deploy the cluster in.
  resourceGroup: capi-quickstart

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Cluster
metadata:
  name: capi-quickstart
spec:
  clusterNetwork:
    pods:
      cidrBlocks: ["192.168.0.0/16"]
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: DockerCluster
    name: capi-quickstart
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: DockerCluster
metadata:
  name: capi-quickstart

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Cluster
metadata:
  name: capi-quickstart
spec:
  clusterNetwork:
    pods:
      cidrBlocks: ["192.168.0.0/16"] # CIDR block used by Calico.
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: VSphereCluster
    name: capi-quickstart
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: VSphereCluster
metadata:
  name: capi-quickstart
spec:
  cloudProviderConfiguration:
    global:
      insecure: true
      secretName: cloud-provider-vsphere-credentials
      secretNamespace: kube-system
    network:
      name: vm-network-1
    providerConfig:
      cloud:
        controllerImage: gcr.io/cloud-provider-vsphere/cpi/release/manager:v1.0.0
      storage:
        attacherImage: quay.io/k8scsi/csi-attacher:v1.1.1
        controllerImage: gcr.io/cloud-provider-vsphere/csi/release/driver:v1.0.1
        livenessProbeImage: quay.io/k8scsi/livenessprobe:v1.1.0
        metadataSyncerImage: gcr.io/cloud-provider-vsphere/csi/release/syncer:v1.0.1
        nodeDriverImage: gcr.io/cloud-provider-vsphere/csi/release/driver:v1.0.1
        provisionerImage: quay.io/k8scsi/csi-provisioner:v1.2.1
        registrarImage: quay.io/k8scsi/csi-node-driver-registrar:v1.1.0
    virtualCenter:
      10.0.0.1:
        datacenters: SDDC-Datacenter
    workspace:
      datacenter: SDDC-Datacenter
      datastore: DefaultDatastore
      folder: vm
      resourcePool: '*/Resources'
      server: 10.0.0.1
  server: 10.0.0.1

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Cluster
metadata:
  name: capi-quickstart
spec:
  clusterNetwork:
    services:
      cidrBlocks: ["10.96.0.0/12"]
    pods:
      cidrBlocks: ["192.168.0.0/16"] # CIDR block used by Calico.
    serviceDomain: "cluster.local"
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: OpenStackCluster
    name: capi-quickstart
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: OpenStackCluster
metadata:
  name: capi-quickstart
spec:
  cloudName: ${OPENSTACK_CLOUD}
  cloudsSecret:
    name: cloud-config
  nodeCidr: ${NODE_CIDR}
  externalNetworkId: ${OPENSTACK_EXTERNAL_NETWORK_ID}
  disablePortSecurity: true
  disableServerTags: true
---
apiVersion: v1
kind: Secret
metadata:
  name: cloud-config
type: Opaque
data:
  # This file has to be in the regular OpenStack cloud.yaml format
  clouds.yaml: ${OPENSTACK_CLOUD_CONFIG_B64ENCODED}
  cacert: ${OPENSTACK_CLOUD_CACERT_B64ENCODED}
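The manifests above are meant to be saved to a file and submitted to the management cluster. One possible way to do that is sketched below. It assumes a hypothetical file name such as `cluster.yaml`, and it pipes the file through envsubst because some of the manifests contain `${VAR}` placeholders. The helper name `apply_manifest` is illustrative only:

```shell
# apply_manifest: hypothetical helper. Renders ${VAR} placeholders in a
# manifest file with envsubst and applies the result with kubectl.
apply_manifest() {
  manifest="$1"
  if [ ! -f "$manifest" ]; then
    echo "manifest not found: $manifest" >&2
    return 1
  fi
  # Unset variables are rendered as empty strings, so export the values
  # the manifest expects before calling this function.
  envsubst < "$manifest" | kubectl apply -f -
}

# Example (cluster.yaml is an assumed file name):
#   apply_manifest ./cluster.yaml
```

The same helper could be reused for the Machine and MachineDeployment manifests later in this tutorial.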

Now that we’ve created the cluster object, we can create a control plane Machine.

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Machine
metadata:
  name: capi-quickstart-controlplane-0
  labels:
    cluster.x-k8s.io/control-plane: "true"
    cluster.x-k8s.io/cluster-name: "capi-quickstart"
spec:
  version: v1.15.3
  bootstrap:
    configRef:
      apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
      kind: KubeadmConfig
      name: capi-quickstart-controlplane-0
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: AWSMachine
    name: capi-quickstart-controlplane-0
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AWSMachine
metadata:
  name: capi-quickstart-controlplane-0
spec:
  instanceType: t3.large
  # This IAM profile is part of the pre-requisites.
  iamInstanceProfile: "control-plane.cluster-api-provider-aws.sigs.k8s.io"
  # Change this value to a valid SSH Key Pair present in your AWS Account.
  sshKeyName: default
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfig
metadata:
  name: capi-quickstart-controlplane-0
spec:
  # For more information about these values,
  # refer to the Kubeadm Bootstrap Provider documentation.
  initConfiguration:
    nodeRegistration:
      name: '{{ ds.meta_data.hostname }}'
      kubeletExtraArgs:
        cloud-provider: aws
  clusterConfiguration:
    apiServer:
      extraArgs:
        cloud-provider: aws
    controllerManager:
      extraArgs:
        cloud-provider: aws

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Machine
metadata:
  name: capi-quickstart-controlplane-0
  labels:
    cluster.x-k8s.io/control-plane: "true"
    cluster.x-k8s.io/cluster-name: "capi-quickstart"
spec:
  version: v1.16.6
  bootstrap:
    configRef:
      apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
      kind: KubeadmConfig
      name: capi-quickstart-controlplane-0
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: AzureMachine
    name: capi-quickstart-controlplane-0
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AzureMachine
metadata:
  name: capi-quickstart-controlplane-0
spec:
  location: ${AZURE_LOCATION}
  osDisk:
    diskSizeGB: 30
    managedDisk:
      storageAccountType: Premium_LRS
    osType: Linux
  sshPublicKey: ${SSH_PUBLIC_KEY}
  vmSize: Standard_B2ms
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfig
metadata:
  name: capi-quickstart-controlplane-0
spec:
  # For more information about these values,
  # refer to the Kubeadm Bootstrap Provider documentation.
  clusterConfiguration:
    apiServer:
      extraArgs:
        cloud-config: /etc/kubernetes/azure.json
        cloud-provider: azure
      extraVolumes:
        - hostPath: /etc/kubernetes/azure.json
          mountPath: /etc/kubernetes/azure.json
          name: cloud-config
          readOnly: true
      timeoutForControlPlane: 20m
    controllerManager:
      extraArgs:
        allocate-node-cidrs: "false"
        cloud-config: /etc/kubernetes/azure.json
        cloud-provider: azure
      extraVolumes:
        - hostPath: /etc/kubernetes/azure.json
          mountPath: /etc/kubernetes/azure.json
          name: cloud-config
          readOnly: true
  files:
    - content: |
        {
          "cloud": "AzurePublicCloud",
          "tenantId": "${AZURE_TENANT_ID}",
          "subscriptionId": "${AZURE_SUBSCRIPTION_ID}",
          "aadClientId": "${AZURE_CLIENT_ID}",
          "aadClientSecret": "${AZURE_CLIENT_SECRET}",
          "resourceGroup": "capi-quickstart",
          "securityGroupName": "capi-quickstart-controlplane-nsg",
          "location": "${AZURE_LOCATION}",
          "vmType": "standard",
          "vnetName": "capi-quickstart",
          "vnetResourceGroup": "capi-quickstart",
          "subnetName": "capi-quickstart-controlplane-subnet",
          "routeTableName": "capi-quickstart-node-routetable",
          "userAssignedID": "capi-quickstart",
          "loadBalancerSku": "standard",
          "maximumLoadBalancerRuleCount": 250,
          "useManagedIdentityExtension": false,
          "useInstanceMetadata": true
        }
      owner: root:root
      path: /etc/kubernetes/azure.json
      permissions: "0644"
  initConfiguration:
    nodeRegistration:
      kubeletExtraArgs:
        cloud-config: /etc/kubernetes/azure.json
        cloud-provider: azure
      name: '{{ ds.meta_data["local_hostname"] }}'

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Machine
metadata:
  name: capi-quickstart-controlplane-0
  labels:
    cluster.x-k8s.io/control-plane: "true"
    cluster.x-k8s.io/cluster-name: "capi-quickstart"
spec:
  version: v1.15.3
  bootstrap:
    configRef:
      apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
      kind: KubeadmConfig
      name: capi-quickstart-controlplane-0
  infrastructureRef:
    kind: DockerMachine
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    name: capi-quickstart-controlplane-0
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: DockerMachine
metadata:
  name: capi-quickstart-controlplane-0
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfig
metadata:
  name: capi-quickstart-controlplane-0
spec:
  initConfiguration:
    nodeRegistration:
      kubeletExtraArgs:
        # Default thresholds are higher to provide a buffer before resources
        # are completely depleted, at the cost of requiring more total
        # resources. These low thresholds allow running with fewer resources.
        # Appropriate for testing or development only.
        eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
  clusterConfiguration:
    controllerManager:
      extraArgs:
        # Enables dynamic storage provisioning without a cloud provider.
        # Appropriate for testing or development only.
        enable-hostpath-provisioner: "true"

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Machine
metadata:
  name: capi-quickstart-controlplane-0
  labels:
    cluster.x-k8s.io/control-plane: "true"
    cluster.x-k8s.io/cluster-name: "capi-quickstart"
spec:
  version: v1.16.2
  bootstrap:
    configRef:
      apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
      kind: KubeadmConfig
      name: capi-quickstart-controlplane-0
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: VSphereMachine
    name: capi-quickstart-controlplane-0
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: VSphereMachine
metadata:
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    cluster.x-k8s.io/control-plane: "true"
  name: capi-quickstart-controlplane-0
  namespace: default
spec:
  datacenter: SDDC-Datacenter
  diskGiB: 50
  memoryMiB: 2048
  network:
    devices:
      - dhcp4: true
        dhcp6: false
        networkName: vm-network-1
  numCPUs: 2
  template: ubuntu-1804-kube-v1.16.2
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfig
metadata:
  name: capi-quickstart-controlplane-0
  namespace: default
spec:
  clusterConfiguration:
    apiServer:
      extraArgs:
        cloud-provider: external
    controllerManager:
      extraArgs:
        cloud-provider: external
    imageRepository: k8s.gcr.io
  initConfiguration:
    nodeRegistration:
      criSocket: /var/run/containerd/containerd.sock
      kubeletExtraArgs:
        cloud-provider: external
      name: '{{ ds.meta_data.hostname }}'
  preKubeadmCommands:
    - hostname "{{ ds.meta_data.hostname }}"
    - echo "::1 ipv6-localhost ipv6-loopback" >/etc/hosts
    - echo "127.0.0.1 localhost {{ ds.meta_data.hostname }}" >>/etc/hosts
    - echo "{{ ds.meta_data.hostname }}" >/etc/hostname

apiVersion: cluster.x-k8s.io/v1alpha2
kind: Machine
metadata:
  name: capi-quickstart-controlplane-0
  labels:
    cluster.x-k8s.io/control-plane: "true"
    cluster.x-k8s.io/cluster-name: "capi-quickstart"
spec:
  version: v1.15.3
  bootstrap:
    configRef:
      apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
      kind: KubeadmConfig
      name: capi-quickstart-controlplane-0
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
    kind: OpenStackMachine
    name: capi-quickstart-controlplane-0
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: OpenStackMachine
metadata:
  name: capi-quickstart-controlplane-0
spec:
  flavor: m1.medium
  image: ${IMAGE_NAME}
  availabilityZone: nova
  floatingIP: ${FLOATING_IP}
  cloudName: ${OPENSTACK_CLOUD}
  cloudsSecret:
    name: cloud-config
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfig
metadata:
  name: capi-quickstart-controlplane-0
spec:
  # For more information about these values,
  # refer to the Kubeadm Bootstrap Provider documentation.
  initConfiguration:
    localAPIEndpoint:
      advertiseAddress: '{{ ds.ec2_metadata.local_ipv4 }}'
      bindPort: 6443
    nodeRegistration:
      name: '{{ local_hostname }}'
      criSocket: "/var/run/containerd/containerd.sock"
      kubeletExtraArgs:
        cloud-provider: openstack
        cloud-config: /etc/kubernetes/cloud.conf
  clusterConfiguration:
    controlPlaneEndpoint: "${FLOATING_IP}:6443"
    imageRepository: k8s.gcr.io
    apiServer:
      extraArgs:
        cloud-provider: openstack
        cloud-config: /etc/kubernetes/cloud.conf
      extraVolumes:
        - name: cloud
          hostPath: /etc/kubernetes/cloud.conf
          mountPath: /etc/kubernetes/cloud.conf
          readOnly: true
    controllerManager:
      extraArgs:
        cloud-provider: openstack
        cloud-config: /etc/kubernetes/cloud.conf
      extraVolumes:
        - name: cloud
          hostPath: /etc/kubernetes/cloud.conf
          mountPath: /etc/kubernetes/cloud.conf
          readOnly: true
        - name: cacerts
          hostPath: /etc/certs/cacert
          mountPath: /etc/certs/cacert
          readOnly: true
  files:
    - path: /etc/kubernetes/cloud.conf
      owner: root
      permissions: "0600"
      encoding: base64
      # This file has to be in the format of the
      # OpenStack cloud provider
      content: |-
        ${OPENSTACK_CLOUD_CONFIG_B64ENCODED}
    - path: /etc/certs/cacert
      owner: root
      permissions: "0600"
      content: |
        ${OPENSTACK_CLOUD_CACERT_B64ENCODED}
  users:
    - name: capo
      sudo: "ALL=(ALL) NOPASSWD:ALL"
      sshAuthorizedKeys:
        - "${SSH_AUTHORIZED_KEY}"

To verify the control plane is up, check if the control plane machine has a ProviderID.

kubectl get machines --selector cluster.x-k8s.io/control-plane
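Because provisioning can take several minutes, it may be convenient to poll for the ProviderID rather than re-running the command by hand. The following is a sketch under stated assumptions: the helper name `wait_for_provider_id` and its default retry count and delay are hypothetical, and it reads the `.spec.providerID` field of the Machine object using kubectl's JSONPath output.

```shell
# wait_for_provider_id: hypothetical helper. Polls a Machine until its
# spec.providerID is set, then prints the value. Returns non-zero if the
# field is still empty after the given number of attempts.
wait_for_provider_id() {
  machine="$1"
  attempts="${2:-30}"   # assumed default: 30 attempts
  delay="${3:-10}"      # assumed default: 10 seconds between attempts
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Errors (e.g. the Machine does not exist yet) are treated as "not ready".
    id="$(kubectl get machine "$machine" -o jsonpath='{.spec.providerID}' 2>/dev/null || true)"
    if [ -n "$id" ]; then
      echo "$id"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example:
#   wait_for_provider_id capi-quickstart-controlplane-0
```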

After the control plane is up, we can retrieve the workload cluster kubeconfig:

kubectl --namespace=default get secret/capi-quickstart-kubeconfig -o json \
  | jq -r .data.value \
  | base64 --decode \
  > ./capi-quickstart.kubeconfig
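If jq is not installed, kubectl's built-in JSONPath output can extract the same field. A minimal sketch: the function name `fetch_kubeconfig` is hypothetical, while the `<cluster>-kubeconfig` secret name and the `.data.value` key are taken from the command above.

```shell
# fetch_kubeconfig: hypothetical helper. Prints the decoded kubeconfig of a
# workload cluster to stdout, without requiring jq.
fetch_kubeconfig() {
  cluster="$1"
  namespace="${2:-default}"
  kubectl --namespace="$namespace" get secret "${cluster}-kubeconfig" \
    -o jsonpath='{.data.value}' | base64 --decode
}

# Example:
#   fetch_kubeconfig capi-quickstart > ./capi-quickstart.kubeconfig
```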

When using Docker for Mac on macOS, a couple of additional steps are needed to get the correct kubeconfig:

# Point the kubeconfig to the exposed port of the load balancer, rather than the inaccessible container IP.

sed -i -e "s/server:.*/server: https:\/\/$(docker port capi-quickstart-lb 6443/tcp | sed "s/0.0.0.0/127.0.0.1/")/g" ./capi-quickstart.kubeconfig

# Ignore the CA, because it is not signed for 127.0.0.1

sed -i -e "s/certificate-authority-data:.*/insecure-skip-tls-verify: true/g" ./capi-quickstart.kubeconfig

Deploy a CNI solution. Calico is used here as an example.

kubectl --kubeconfig=./capi-quickstart.kubeconfig \
  apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

After a short while, our control plane should be running and in the Ready state. Let’s check the status using kubectl get nodes:

kubectl --kubeconfig=./capi-quickstart.kubeconfig get nodes
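For scripted checks, the node list can be reduced to a single number. The sketch below assumes the default `kubectl get nodes` column layout (NAME, STATUS, ...) and introduces a hypothetical helper, `count_not_ready`, that counts lines whose STATUS column does not start with `Ready`:

```shell
# count_not_ready: hypothetical helper. Reads `kubectl get nodes --no-headers`
# output on stdin and prints how many nodes are not in a Ready state.
# A cordoned node ("Ready,SchedulingDisabled") still counts as Ready here.
count_not_ready() {
  awk 'NF && $2 !~ /^Ready/ { n++ } END { print n + 0 }'
}

# Example:
#   kubectl --kubeconfig=./capi-quickstart.kubeconfig get nodes --no-headers \
#     | count_not_ready
```

A result of `0` suggests every listed node reports Ready; any other value is the number of nodes still coming up.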

Finishing up, we’ll create a single node MachineDeployment.

apiVersion: cluster.x-k8s.io/v1alpha2
kind: MachineDeployment
metadata:
  name: capi-quickstart-worker
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    # Labels beyond this point are for example purposes,
    # feel free to add more or change with something more meaningful.
    # Sync these values with spec.selector.matchLabels and spec.template.metadata.labels.
    nodepool: nodepool-0
spec:
  replicas: 1
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: capi-quickstart
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: capi-quickstart
        nodepool: nodepool-0
    spec:
      version: v1.15.3
      bootstrap:
        configRef:
          name: capi-quickstart-worker
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
          kind: KubeadmConfigTemplate
      infrastructureRef:
        name: capi-quickstart-worker
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
        kind: AWSMachineTemplate
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AWSMachineTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec:
      instanceType: t3.large
      # This IAM profile is part of the pre-requisites.
      iamInstanceProfile: "nodes.cluster-api-provider-aws.sigs.k8s.io"
      # Change this value to a valid SSH Key Pair present in your AWS Account.
      sshKeyName: default
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfigTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec:
      # For more information about these values,
      # refer to the Kubeadm Bootstrap Provider documentation.
      joinConfiguration:
        nodeRegistration:
          name: '{{ ds.meta_data.hostname }}'
          kubeletExtraArgs:
            cloud-provider: aws

apiVersion: cluster.x-k8s.io/v1alpha2
kind: MachineDeployment
metadata:
  name: capi-quickstart-node
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    # Labels beyond this point are for example purposes,
    # feel free to add more or change with something more meaningful.
    # Sync these values with spec.selector.matchLabels and spec.template.metadata.labels.
    nodepool: nodepool-0
spec:
  replicas: 1
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: capi-quickstart
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: capi-quickstart
        nodepool: nodepool-0
    spec:
      version: v1.16.6
      bootstrap:
        configRef:
          name: capi-quickstart-node
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
          kind: KubeadmConfigTemplate
      infrastructureRef:
        name: capi-quickstart-node
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
        kind: AzureMachineTemplate
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: AzureMachineTemplate
metadata:
  name: capi-quickstart-node
spec:
  template:
    spec:
      location: ${AZURE_LOCATION}
      vmSize: Standard_B2ms
      osDisk:
        osType: "Linux"
        diskSizeGB: 30
        managedDisk:
          storageAccountType: "Premium_LRS"
      sshPublicKey: ${SSH_PUBLIC_KEY}
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfigTemplate
metadata:
  name: capi-quickstart-node
spec:
  template:
    spec:
      joinConfiguration:
        nodeRegistration:
          name: '{{ ds.meta_data["local_hostname"] }}'
          kubeletExtraArgs:
            cloud-provider: azure
            cloud-config: /etc/kubernetes/azure.json
      files:
        - path: /etc/kubernetes/azure.json
          owner: "root:root"
          permissions: "0644"
          content: |
            {
              "cloud": "AzurePublicCloud",
              "tenantId": "${AZURE_TENANT_ID}",
              "subscriptionId": "${AZURE_SUBSCRIPTION_ID}",
              "aadClientId": "${AZURE_CLIENT_ID}",
              "aadClientSecret": "${AZURE_CLIENT_SECRET}",
              "resourceGroup": "capi-quickstart",
              "securityGroupName": "capi-quickstart-controlplane-nsg",
              "location": "${AZURE_LOCATION}",
              "vmType": "standard",
              "vnetName": "capi-quickstart",
              "vnetResourceGroup": "capi-quickstart",
              "subnetName": "capi-quickstart-controlplane-subnet",
              "routeTableName": "capi-quickstart-node-routetable",
              "userAssignedID": "capi-quickstart",
              "loadBalancerSku": "standard",
              "maximumLoadBalancerRuleCount": 250,
              "useManagedIdentityExtension": false,
              "useInstanceMetadata": true
            }

apiVersion: cluster.x-k8s.io/v1alpha2
kind: MachineDeployment
metadata:
  name: capi-quickstart-worker
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    # Labels beyond this point are for example purposes,
    # feel free to add more or change with something more meaningful.
    # Sync these values with spec.selector.matchLabels and spec.template.metadata.labels.
    nodepool: nodepool-0
spec:
  replicas: 1
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: capi-quickstart
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: capi-quickstart
        nodepool: nodepool-0
    spec:
      version: v1.15.3
      bootstrap:
        configRef:
          name: capi-quickstart-worker
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
          kind: KubeadmConfigTemplate
      infrastructureRef:
        name: capi-quickstart-worker
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
        kind: DockerMachineTemplate
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: DockerMachineTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec: {}
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfigTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec:
      # For more information about these values,
      # refer to the Kubeadm Bootstrap Provider documentation.
      joinConfiguration:
        nodeRegistration:
          kubeletExtraArgs:
            eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
      clusterConfiguration:
        controllerManager:
          extraArgs:
            enable-hostpath-provisioner: "true"

apiVersion: cluster.x-k8s.io/v1alpha2
kind: MachineDeployment
metadata:
  name: capi-quickstart-worker
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    # Labels beyond this point are for example purposes,
    # feel free to add more or change with something more meaningful.
    # Sync these values with spec.selector.matchLabels and spec.template.metadata.labels.
    nodepool: nodepool-0
spec:
  replicas: 1
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: capi-quickstart
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: capi-quickstart
        nodepool: nodepool-0
    spec:
      version: v1.16.2
      bootstrap:
        configRef:
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
          kind: KubeadmConfigTemplate
          name: capi-quickstart-worker
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
        kind: VSphereMachineTemplate
        name: capi-quickstart-worker
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: VSphereMachineTemplate
metadata:
  # Must match spec.template.spec.infrastructureRef.name in the MachineDeployment.
  name: capi-quickstart-worker
  namespace: default
spec:
  template:
    spec:
      datacenter: SDDC-Datacenter
      diskGiB: 50
      memoryMiB: 2048
      network:
        devices:
          - dhcp4: true
            dhcp6: false
            networkName: vm-network-1
      numCPUs: 2
      template: ubuntu-1804-kube-v1.16.2
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfigTemplate
metadata:
  # Must match spec.template.spec.bootstrap.configRef.name in the MachineDeployment.
  name: capi-quickstart-worker
  namespace: default
spec:
  template:
    spec:
      joinConfiguration:
        nodeRegistration:
          criSocket: /var/run/containerd/containerd.sock
          kubeletExtraArgs:
            cloud-provider: external
          name: '{{ ds.meta_data.hostname }}'
      preKubeadmCommands:
        - hostname "{{ ds.meta_data.hostname }}"
        - echo "::1 ipv6-localhost ipv6-loopback" >/etc/hosts
        - echo "127.0.0.1 localhost {{ ds.meta_data.hostname }}" >>/etc/hosts
        - echo "{{ ds.meta_data.hostname }}" >/etc/hostname

apiVersion: cluster.x-k8s.io/v1alpha2
kind: MachineDeployment
metadata:
  name: capi-quickstart-worker
  labels:
    cluster.x-k8s.io/cluster-name: capi-quickstart
    # Labels beyond this point are for example purposes,
    # feel free to add more or change with something more meaningful.
    # Sync these values with spec.selector.matchLabels and spec.template.metadata.labels.
    nodepool: nodepool-0
spec:
  replicas: 1
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: capi-quickstart
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: capi-quickstart
        nodepool: nodepool-0
    spec:
      version: v1.15.3
      bootstrap:
        configRef:
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
          kind: KubeadmConfigTemplate
          name: capi-quickstart-worker
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
        kind: OpenStackMachineTemplate
        name: capi-quickstart-worker
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
kind: OpenStackMachineTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec:
      availabilityZone: nova
      cloudName: ${OPENSTACK_CLOUD}
      cloudsSecret:
        name: cloud-config
      flavor: m1.medium
      image: ${IMAGE_NAME}
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
kind: KubeadmConfigTemplate
metadata:
  name: capi-quickstart-worker
spec:
  template:
    spec:
      # For more information about these values,
      # refer to the Kubeadm Bootstrap Provider documentation.
      joinConfiguration:
        nodeRegistration:
          name: '{{ local_hostname }}'
          criSocket: "/var/run/containerd/containerd.sock"
          kubeletExtraArgs:
            cloud-config: /etc/kubernetes/cloud.conf
            cloud-provider: openstack
      files:
        - path: /etc/kubernetes/cloud.conf
          owner: root
          permissions: "0600"
          encoding: base64
          # This file has to be in the format of the
          # OpenStack cloud provider
          content: |-
            ${OPENSTACK_CLOUD_CONFIG_B64ENCODED}
        - path: /etc/certs/cacert
          owner: root
          permissions: "0600"
          content: |
            ${OPENSTACK_CLOUD_CACERT_B64ENCODED}
      users:
        - name: capo
          sudo: "ALL=(ALL) NOPASSWD:ALL"
          sshAuthorizedKeys:
            - "${SSH_AUTHORIZED_KEY}"

Concepts

Management cluster

The cluster where one or more Infrastructure Providers run, and where resources (e.g. Machines) are stored. Typically referred to when you are provisioning multiple clusters.

Workload/Target Cluster

A cluster whose lifecycle is managed by the Management cluster.

Infrastructure provider

A source of computational resources (e.g. machines, networking, etc.). Examples for cloud include AWS, Azure, Google, etc.; for bare metal include VMware, MAAS, metal3.io, etc. When there is more than one way to obtain resources from the same infrastructure provider (e.g. EC2 vs. EKS) each way is referred to as a variant.

Bootstrap provider

The bootstrap provider is responsible for (usually by generating cloud-init or similar):

- Generating the cluster certificates, if not otherwise specified

- Initializing the control plane, and gating the creation of other nodes until it is complete

- Joining master and worker nodes to the cluster

Control plane

The control plane (sometimes referred to as master nodes) is a set of services that serve the Kubernetes API and reconcile desired state through the control-loops.

- Machine Based control planes are the most common deployment model and are used by tools like kubeadm and kubespray. Dedicated machines are provisioned, running static pods for the control plane components such as kube-apiserver, kube-controller-manager and kube-scheduler.

- Pod Based deployments require an external hosting cluster. The control plane is deployed using standard Deployment and StatefulSet objects, and the API is then exposed using a Service.

- External control planes are offered and controlled by some system other than Cluster API (e.g., GKE, AKS, EKS, IKS).

As of v1alpha2 Machine Based is the only supported Cluster API control plane type.

Custom Resource Definitions (CRDs)

Machine

A “Machine” is the declarative spec for a Node, as represented in Kubernetes core. If a new Machine object is created, a provider-specific controller will handle provisioning and installing a new host to register as a new Node matching the Machine spec. If the Machine’s spec is updated, a provider-specific controller is responsible for updating the Node in-place or replacing the host with a new one matching the updated spec. If a Machine object is deleted, the corresponding Node should have its external resources released by the provider-specific controller, and should be deleted as well.

Fields like the kubelet version are modeled as fields on the Machine’s spec. Any other information that is provider-specific, though, is part of the InfraProviderRef and is not portable between different providers.

Machine Immutability (In-place Upgrade vs. Replace)

From the perspective of Cluster API, all machines are immutable: once they are created they are never updated (except possibly for labels, annotations and status), only deleted.

For this reason, it is recommended to use MachineDeployments, which handle changes to machines by replacing them, in the same way regular Deployments handle changes to the podSpec.

MachineDeployment

A MachineDeployment works similarly to a regular Pod Deployment: it reconciles changes to a machine spec by rolling out the change across two MachineSets, the old one and the newly updated one.

MachineSet

MachineSets work similarly to regular Pod ReplicaSets. MachineSets are not meant to be used directly; rather, they are the mechanism MachineDeployments use to reconcile desired state.

BootstrapData

BootstrapData contains the machine or node role specific initialization data (usually cloud-init) used by the infrastructure provider to bootstrap a machine into a node.

Installation

Prerequisites

- Install and set up kubectl in your local environment.

- Install and/or configure a management cluster

Setup Management Cluster

Cluster API requires an existing Kubernetes cluster accessible via kubectl. Choose one of the options below:

- Kind

kind create cluster --name=clusterapi

kubectl cluster-info --context kind-clusterapi

Because the Docker provider needs to access Docker on the host, a custom kind cluster configuration is required:

cat > kind-cluster-with-extramounts.yaml <<EOF
kind: Cluster
apiVersion: kind.sigs.k8s.io/v1alpha3
nodes:
  - role: control-plane
    extraMounts:
      - hostPath: /var/run/docker.sock
        containerPath: /var/run/docker.sock
EOF

kind create cluster --config ./kind-cluster-with-extramounts.yaml --name clusterapi

kubectl cluster-info --context kind-clusterapi

- Existing Management Cluster

For production use-cases a “real” kubernetes cluster should be used with appropriate backup and DR policies and procedures in place.

export KUBECONFIG=<...>

- Pivoting

Pivoting is the process of using an initial kind cluster to create a new workload cluster, and then converting the workload cluster into a management cluster by migrating the Cluster API CRDs.

Installation

Using kubectl, create the components on the management cluster:

Install Cluster API

kubectl create -f https://github.com/kubernetes-sigs/cluster-api/releases/download/v0.2.11/cluster-api-components.yaml

Install the Bootstrap Provider

Check the Kubeadm provider releases for an up-to-date components file.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-bootstrap-provider-kubeadm/releases/download/v0.1.7/bootstrap-components.yaml

Install Infrastructure Provider

Install clusterawsadm

Download the latest binary of clusterawsadm from the AWS provider releases and make sure to place it in your path.

Create the components

Check the AWS provider releases for an up-to-date components file.

# Create the base64 encoded credentials using clusterawsadm.

# This command uses your environment variables and encodes

# them in a value to be stored in a Kubernetes Secret.

export AWS_B64ENCODED_CREDENTIALS=$(clusterawsadm alpha bootstrap encode-aws-credentials)

# Create the components.

curl -L https://github.com/kubernetes-sigs/cluster-api-provider-aws/releases/download/v0.4.10/infrastructure-components.yaml \

| envsubst \

| kubectl create -f -

Check the Azure provider releases for an up-to-date components file.

# Create the base64 encoded credentials

export AZURE_SUBSCRIPTION_ID_B64="$(echo -n "$AZURE_SUBSCRIPTION_ID" | base64 | tr -d '\n')"

export AZURE_TENANT_ID_B64="$(echo -n "$AZURE_TENANT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_ID_B64="$(echo -n "$AZURE_CLIENT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_SECRET_B64="$(echo -n "$AZURE_CLIENT_SECRET" | base64 | tr -d '\n')"

curl -L https://github.com/kubernetes-sigs/cluster-api-provider-azure/releases/download/v0.3.1/infrastructure-components.yaml \

| envsubst \

| kubectl create -f -

Check the Docker provider releases for an up-to-date components file.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-docker/releases/download/v0.2.1/provider-components.yaml

It is required to use an official CAPV machine image for your vSphere VM templates. See Uploading CAPV Machine Images for instructions on how to do this.

# Upload vCenter credentials as a Kubernetes secret

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  labels:
    control-plane: controller-manager
  name: capv-system
---
apiVersion: v1
kind: Secret
metadata:
  name: capv-manager-bootstrap-credentials
  namespace: capv-system
type: Opaque
data:
  username: "<my vCenter username>"
  password: "<my vCenter password>"
EOF

$ kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-vsphere/releases/download/v0.5.5/infrastructure-components.yaml

Check the vSphere provider releases for an up-to-date components file.

For more information about prerequisites, credentials management, or permissions for vSphere, visit the getting started guide.

Check the OpenStack provider releases for an up-to-date components file.

For more detailed information, e.g. about prerequisites visit the getting started guide.

kubectl create -f https://github.com/kubernetes-sigs/cluster-api-provider-openstack/releases/download/v0.2.0/infrastructure-components.yaml

Using Custom Certificates

Cluster API expects certificates and keys used for bootstrapping to follow the convention below. CABPK generates new certificates using this convention if they do not already exist.

Each certificate must be stored in a single secret named one of:

| Name | Type | Example |

|---|---|---|

| [cluster name]-ca | CA | openssl req -x509 -subj "/CN=Kubernetes API" -new -newkey rsa:2048 -nodes -keyout tls.key -sha256 -days 3650 -out tls.crt |

| [cluster name]-etcd | CA | openssl req -x509 -subj "/CN=ETCD CA" -new -newkey rsa:2048 -nodes -keyout tls.key -sha256 -days 3650 -out tls.crt |

| [cluster name]-proxy | CA | openssl req -x509 -subj "/CN=Front-End Proxy" -new -newkey rsa:2048 -nodes -keyout tls.key -sha256 -days 3650 -out tls.crt |

| [cluster name]-sa | Key Pair | openssl genrsa -out tls.key 2048 && openssl rsa -in tls.key -pubout -out tls.crt |

Example

apiVersion: v1
kind: Secret
metadata:
  name: cluster1-ca
type: kubernetes.io/tls
data:
  tls.crt: <base 64 encoded PEM>
  tls.key: <base 64 encoded PEM>

Generating a Kubeconfig with your own CA

- Create a new Certificate Signing Request (CSR) for the system:masters Kubernetes role, or specify any other role under CN.

openssl req -subj "/CN=system:masters" -new -newkey rsa:2048 -nodes -keyout admin.key -out admin.csr

- Sign the CSR using the [cluster-name]-ca key:

openssl x509 -req -in admin.csr -CA tls.crt -CAkey tls.key -CAcreateserial -out admin.crt -days 5 -sha256

- Update your kubeconfig with the signed certificate and key:

kubectl config set-credentials cluster-admin --client-certificate=admin.crt --client-key=admin.key --embed-certs=true

Developer Guide

This page is still being written - stay tuned!

Repository Layout

This page is still being written - stay tuned!

Developing Cluster API with Tilt

Overview

This document describes how to use kind and Tilt for a simplified workflow that offers easy deployments and rapid iterative builds.

Prerequisites

- Docker

- kind v0.6 or newer (other clusters can be used if preload_images_for_kind is set to false)

- kustomize standalone (kubectl kustomize does not work because it is missing some features of kustomize v3)

- Tilt

- Clone the Cluster API repository locally

- Clone the provider(s) you want to deploy locally as well

Getting started

Create a kind cluster

First, make sure you have a kind cluster and that your KUBECONFIG is set up correctly:

$ kind create cluster

Create a tilt-settings.json file

Next, create a tilt-settings.json file and place it in your local copy of cluster-api. Here is an example:

{
  "allowed_contexts": ["kind-kind"],
  "default_registry": "gcr.io/your-project-name-here",
  "provider_repos": ["../cluster-api-provider-aws"],
  "enable_providers": ["aws", "docker"]
}

tilt-settings.json fields

allowed_contexts (Array, default=[]): A list of kubeconfig contexts Tilt is allowed to use. See the Tilt documentation on allow_k8s_contexts for more details.

default_registry (String, default=""): The image registry to use if you need to push images. See the Tilt documentation for more details.

provider_repos (Array[]String, default=[]): A list of paths to all the providers you want to use. Each provider must have a tilt-provider.json file describing how to build the provider.

enable_providers (Array[]String, default=['docker']): A list of the providers to enable. See available providers for more details.

kustomize_substitutions (Map{String: String}, default={}): An optional map of substitutions for ${}-style placeholders in the provider’s yaml.

For example, if the yaml contains ${AWS_B64ENCODED_CREDENTIALS}, you could do the following:

"kustomize_substitutions": {

"AWS_B64ENCODED_CREDENTIALS": "your credentials here"

}

You can generate a base64 version of your GCP json credentials file using:

base64 -i ~/path/to/gcp/credentials.json

"kustomize_substitutions": {

"GCP_B64ENCODED_CREDENTIALS": "your credentials here"

}

Run Tilt!

To launch your development environment, run

tilt up

This will open the command-line HUD as well as a web browser interface. You can monitor Tilt’s status in either location. After a brief amount of time, you should have a running development environment, and you should now be able to create a cluster. Please see the Usage section in the Quick Start for more information on creating workload clusters.

Available providers

The following providers are currently defined in the Tiltfile:

- core: cluster-api itself (Cluster/Machine/MachineDeployment/MachineSet/KubeadmConfig/KubeadmControlPlane)

- docker: Docker provider (DockerCluster/DockerMachine)

tilt-provider.json

A provider must supply a tilt-provider.json file describing how to build it. Here is an example:

{
  "name": "aws",
  "config": {
    "image": "gcr.io/k8s-staging-cluster-api-aws/cluster-api-aws-controller",
    "live_reload_deps": [
      "main.go", "go.mod", "go.sum", "api", "cmd", "controllers", "pkg"
    ]
  }
}

config fields

image: the image for this provider, as referenced in the kustomize files. This must match; otherwise, Tilt won’t build it.

live_reload_deps: a list of files/directories to watch. If any of them changes, Tilt rebuilds the manager binary for the provider and performs a live update of the running container.

additional_docker_helper_commands (String, default=""): Additional commands to be run in the helper image docker build. e.g.

RUN wget -qO- https://dl.k8s.io/v1.14.4/kubernetes-client-linux-amd64.tar.gz | tar xvz

RUN wget -qO- https://get.docker.com | sh

additional_docker_build_commands (String, default=""): Additional commands to be appended to the dockerfile. The manager image will use docker-slim, so to download files, use additional_docker_helper_commands. e.g.

COPY --from=tilt-helper /usr/bin/docker /usr/bin/docker

COPY --from=tilt-helper /go/kubernetes/client/bin/kubectl /usr/bin/kubectl

Customizing Tilt

If you need to customize Tilt’s behavior, you can create files in cluster-api’s tilt.d directory. This directory is ignored by git, so any files you place there will never be checked in to source control.

These files are included after the providers map has been defined and after all the helper function definitions. This is immediately before the “real work” happens.

Under the covers, a.k.a “the real work”

At a high level, the Tiltfile performs the following actions:

- Read tilt-settings.json

- Configure the allowed Kubernetes contexts

- Set the default registry

- Define the providers map

- Include user-defined Tilt files

- Enable providers (core + what is listed in tilt-settings.json)

  - Build the manager binary locally as a local_resource

  - Invoke docker_build for the provider

  - Invoke kustomize for the provider’s config/default directory

Live updates

Each provider in the providers map has a live_reload_deps list. This defines the files and/or directories that Tilt

should monitor for changes. When a dependency is modified, Tilt rebuilds the provider’s manager binary on your local

machine, copies the binary to the running container, and executes a restart script. This is significantly faster

than rebuilding the container image for each change. It also helps keep the size of each development image as small as

possible (the container images do not need the entire go toolchain, source code, module dependencies, etc.).

Controllers

This page is still being written - stay tuned!

Bootstrap Controller

Bootstrapping is the process in which:

- A cluster is bootstrapped

- A machine is bootstrapped and takes on a role within a cluster

CABPK is the reference bootstrap provider and is based on kubeadm. CABPK codifies the steps for creating a cluster in multiple configurations.

See proposal for the full details on how the bootstrap process works.

Implementations

- Kubeadm (Reference Implementation)

Cluster Controller

The Cluster controller’s main responsibilities are:

- Setting an OwnerReference on the infrastructure object referenced in Cluster.Spec.InfrastructureRef.

- Cleanup of all owned objects so that nothing is dangling after deletion.

- Keeping the Cluster’s status in sync with the infrastructure Cluster’s status.

- Creating a kubeconfig secret for workload clusters.

Contracts

Infrastructure Provider

The general expectation of an infrastructure provider is to provision the necessary infrastructure components needed to run a Kubernetes cluster. As an example, the AWS infrastructure provider, specifically the AWSCluster reconciler, will provision a VPC, some security groups, an ELB, a bastion instance and some other components all with AWS best practices baked in. Once that infrastructure is provisioned and ready to be used the AWSMachine reconciler takes over and provisions EC2 instances that will become a Kubernetes cluster through some bootstrap mechanism.

Required status fields

The InfrastructureCluster object must have a status object.

The status object must have several fields defined:

- ready - a boolean field that is true when the infrastructure is ready to be used.

- apiEndpoints - a slice of endpoints (each with a host and a port) that identifies each control plane node’s apiserver endpoint, or a slice with only one endpoint that is a load balancer for all control plane nodes.

Optional status fields

The status object may define several fields that do not affect functionality if missing:

- errorReason - a string that explains why an error has occurred, if possible.

- errorMessage - a string that holds the message contained by the error.

Example:

kind: MyProviderCluster
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
status:
  ready: true
  apiEndpoints:
  - host: example.com
    port: 3333
  - host: example.com
    port: 3334

Secrets

If you are using the kubeadm bootstrap provider you do not have to provide Cluster API any secrets. It will generate all necessary CAs (certificate authorities) for you.

However, if you provide a CA for the cluster then Cluster API will be able to generate a kubeconfig secret. This is useful if you have a custom CA or do not want to use the bootstrap provider’s generated self-signed CA.

| Secret name | Field name | Content |

|---|---|---|

| <cluster-name>-ca | tls.crt | base64 encoded TLS certificate in PEM format |

| <cluster-name>-ca | tls.key | base64 encoded TLS private key in PEM format |

Alternatively, you can bypass Cluster API’s kubeconfig generation entirely by providing a kubeconfig secret formatted as described below.

| Secret name | Field name | Content |

|---|---|---|

| <cluster-name>-kubeconfig | value | base64 encoded kubeconfig |

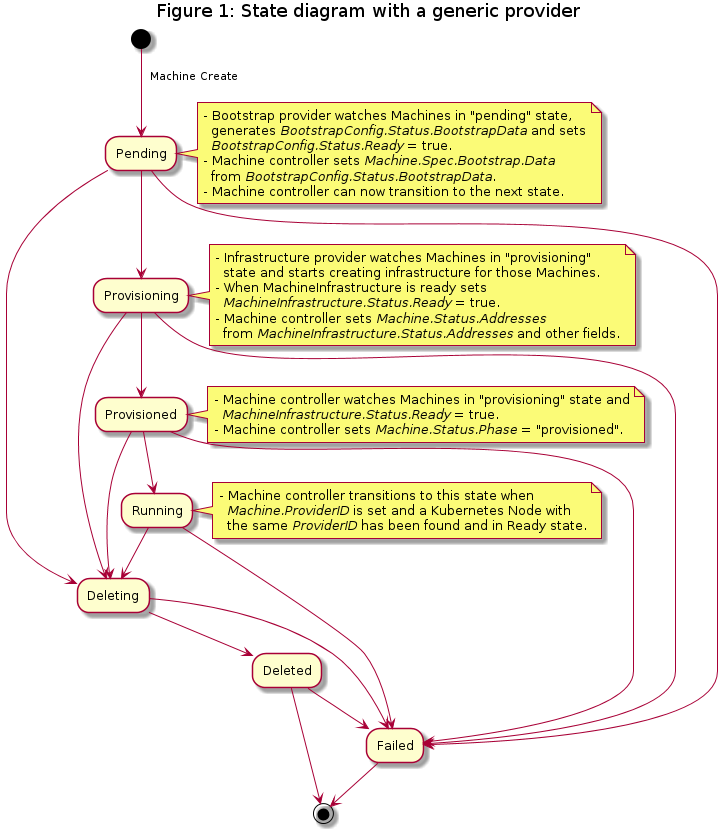

Machine Controller

The Machine controller’s main responsibilities are:

- Setting an OwnerReference on:

  - Each Machine object to the Cluster object.

  - The associated BootstrapConfig object.

  - The associated InfrastructureMachine object.

- Copy data from BootstrapConfig.Status.BootstrapData to Machine.Spec.Bootstrap.Data if Machine.Spec.Bootstrap.Data is empty.

- Setting NodeRefs to be able to associate machines and kubernetes nodes.

- Deleting Nodes in the target cluster when the associated machine is deleted.

- Cleanup of related objects.

- Keeping the Machine’s Status object up to date with the InfrastructureMachine’s Status object.

Contracts

Cluster API

Cluster associations are made via labels.

Expected labels

| what | label | value | meaning |

|---|---|---|---|

| Machine | cluster.x-k8s.io/cluster-name | <cluster-name> | Identify a machine as belonging to a cluster with the name <cluster-name> |

| Machine | cluster.x-k8s.io/control-plane | true | Identifies a machine as a control-plane node |
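To make the label contract concrete, here is a minimal Go sketch that checks the two labels from the table on a plain label map. The helper names belongsToCluster and isControlPlane are hypothetical and are not part of the Cluster API packages.

```go
package main

import "fmt"

// Label keys taken from the table above.
const (
	clusterNameLabel  = "cluster.x-k8s.io/cluster-name"
	controlPlaneLabel = "cluster.x-k8s.io/control-plane"
)

// belongsToCluster (hypothetical helper) reports whether the labels mark a
// Machine as belonging to the named cluster. A missing label never matches.
func belongsToCluster(labels map[string]string, clusterName string) bool {
	name, ok := labels[clusterNameLabel]
	return ok && name == clusterName
}

// isControlPlane (hypothetical helper) reports whether the labels mark a
// Machine as a control-plane node.
func isControlPlane(labels map[string]string) bool {
	return labels[controlPlaneLabel] == "true"
}

func main() {
	labels := map[string]string{
		clusterNameLabel:  "capi-quickstart",
		controlPlaneLabel: "true",
	}
	fmt.Println(belongsToCluster(labels, "capi-quickstart"), isControlPlane(labels))
}
```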

Bootstrap provider

The BootstrapConfig object must have a status object.

To override the bootstrap provider, a user (or external system) can directly set the Machine.Spec.Bootstrap.Data

field. This will mark the machine as ready for bootstrapping and no bootstrap data will be copied from the

BootstrapConfig object.
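The copy-or-override rule described above can be sketched as follows. The types here are simplified, hypothetical stand-ins for the real Machine and BootstrapConfig objects, and the empty string is assumed to mean "not set".

```go
package main

import "fmt"

// bootstrapConfigStatus is a simplified stand-in for BootstrapConfig.Status.
type bootstrapConfigStatus struct {
	Ready         bool
	BootstrapData string
}

// machineBootstrap is a simplified stand-in for Machine.Spec.Bootstrap.
type machineBootstrap struct {
	Data string
}

// syncBootstrapData (hypothetical helper) returns true when the machine has
// bootstrap data and can proceed.
func syncBootstrapData(m *machineBootstrap, s bootstrapConfigStatus) bool {
	// A user or external system already set the data: treat the machine as
	// ready and do not copy anything from the BootstrapConfig.
	if m.Data != "" {
		return true
	}
	// Nothing to copy until the bootstrap provider reports ready.
	if !s.Ready || s.BootstrapData == "" {
		return false
	}
	m.Data = s.BootstrapData
	return true
}

func main() {
	m := &machineBootstrap{}
	ready := syncBootstrapData(m, bootstrapConfigStatus{Ready: true, BootstrapData: "kubeadm init"})
	fmt.Println(ready, m.Data)
}
```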

Required status fields

The status object must have several fields defined:

- ready - a boolean field indicating the bootstrap config data is ready for use.

- bootstrapData - a string field containing some data used for bootstrapping a cluster.

- addresses - a slice of addresses ([]v1.NodeAddress) that contains a list of apiserver endpoints.

Optional status fields

The status object may define several fields that do not affect functionality if missing:

- errorReason - a string that explains why an error has occurred, if possible.

- errorMessage - a string that holds the message contained by the error.

Example:

kind: MyBootstrapProviderConfig
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha2
status:
  ready: true
  bootstrapData: "kubeadm init"
  addresses:
  - type: Hostname
    address: controlplane.example.com

Infrastructure provider

The InfrastructureMachine object must have a status object.

Required status fields

The status object must have several fields defined:

- ready - a boolean field indicating if the infrastructure is ready to be used or not.

- providerID - a cloud provider ID identifying the machine. This is often set by the cloud-provider-controller.

Optional status fields

The status object may define several fields that do not affect functionality if missing:

- errorReason - a string that explains why an error has occurred, if possible.

- errorMessage - a string that holds the message contained by the error.

Example:

kind: MyMachine
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2
status:
  ready: true
  providerID: cloud:////my-cloud-provider-id

Secrets

The Machine controller will create a secret or use an existing secret in the following format:

| secret name | field name | content |

|---|---|---|

| <cluster-name>-kubeconfig | value | base64 encoded kubeconfig that is authenticated with the child cluster |

MachineSet

This page is still being written - stay tuned!

MachineDeployment

This page is still being written - stay tuned!

Node Controller

Provider Implementers

Updating a v1alpha1 provider to a v1alpha2 infrastructure provider

This document outlines how to update a Cluster API (CAPI) v1alpha1 provider to a v1alpha2 infrastructure provider.

General information

This section contains several general notes about the update process.

Provider types

This section reviews the changes to the provider types from v1alpha1 to v1alpha2.

Providers in v1alpha1

Providers in v1alpha1 wrap behavior specific to an environment to create the infrastructure and bootstrap instances into Kubernetes nodes. Examples of environments that have integrated with Cluster API v1alpha1 include AWS, GCP, OpenStack, Azure, vSphere and others. The provider vendors in Cluster API’s controllers, registers its own actuators with the Cluster API controllers, and runs a single manager to complete a Cluster API management cluster.

Providers in v1alpha2

v1alpha2 introduces two new providers and changes how the Cluster API is consumed. This means that in order to have a complete management cluster that is ready to build clusters you will need three managers.

- Core (Cluster API)

- Bootstrap (kubeadm)

- Infrastructure (aws, gcp, azure, vsphere, etc)

Cluster API’s controllers are no longer vendored by providers. Instead, Cluster API offers its own independent controllers that are responsible for the core types:

- Cluster

- Machine

- MachineSet

- MachineDeployment

Bootstrap providers are an entirely new concept aimed at reducing the amount of kubeadm boilerplate that every provider reimplemented in v1alpha1. The Bootstrap provider is responsible for running a controller that generates data necessary to bootstrap an instance into a Kubernetes node (cloud-init, bash, etc).

v1alpha1 “providers” have become Infrastructure providers. The Infrastructure provider is responsible for generating actual infrastructure (networking, load balancers, instances, etc) for a particular environment that can consume bootstrap data to turn the infrastructure into a Kubernetes cluster.

clusterctl

The clusterctl program is also handled differently in v1alpha2.

clusterctl in v1alpha1

clusterctl was a command line tool packaged with v1alpha1 providers. The goal of this tool was to go from nothing to a

running management cluster in whatever environment the provider was built for. For example, Cluster-API-Provider-AWS

packaged a clusterctl that created a Kubernetes cluster in EC2 and installed the necessary controllers to respond to

Cluster API’s APIs.

clusterctl in v1alpha2

clusterctl is likely becoming provider-agnostic meaning one clusterctl is bundled with Cluster API and can be reused

across providers. Work here is still being figured out but providers will not be packaging their own clusterctl

anymore.

The new API groups

This section describes the API groups used by CAPI v1alpha2:

| Group | Description |

|---|---|

| cluster.x-k8s.io | The root CAPI API group |

| infrastructure.cluster.x-k8s.io | The API group for all resources related to CAPI infrastructure providers |

| bootstrap.cluster.x-k8s.io | The API group for all resources related to CAPI bootstrap providers |

Only SIG-sponsored providers may declare their components or resources to belong to any API group that ends with x-k8s.io.

Externally owned providers should use an appropriate API group for their ownership and would require additional RBAC rules to be configured and deployed for the common cluster-api components.

Kubebuilder

While kubebuilder v2 is available, the recommended approach for updating a CAPI provider to v1alpha2 is to stick with kubebuilder v1 during the update process and then reevaluate kubebuilder v2 after a successful migration to CAPI v1alpha2.

Please note if webhooks are required, it may necessitate migrating to kubebuilder v2 as part of the initial migration.

Additionally, kubebuilder v2 includes a lot of changes apart from just code, ex. the Makefile. If moving to v2 as part of this migration, please take a moment to read the kubebuilder book to understand all the differences between v1 and v2.

Sample code and other examples

This document uses the CAPI provider for AWS (CAPA) for sample code and other examples.

Create a branch for new v1alpha1 work

This document assumes the work required to update a provider to v1alpha2 will occur on the project’s master branch. Therefore, the recommendation is to create a branch release-MAJOR.MINOR in the repository from the latest v1alpha1-based release. For example, if the latest release of a provider based on CAPI v1alpha1 was v0.4.1 then the branch release-0.4 should be created. Now the project’s master branch is free to be a target for the work required to update the provider to v1alpha2, and fixes or backported features for the v1alpha1 version of the provider may target the release-0.4 branch.
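The branch naming rule above (release-MAJOR.MINOR, derived from the latest v1alpha1-based tag) can be expressed as a small Go helper. This is only an illustrative sketch; releaseBranch is a hypothetical name, and the function assumes tags of the form vMAJOR.MINOR.PATCH.

```go
package main

import (
	"fmt"
	"strings"
)

// releaseBranch (hypothetical helper) maps a release tag such as "v0.4.1"
// to the maintenance branch name "release-0.4".
func releaseBranch(tag string) (string, error) {
	parts := strings.Split(strings.TrimPrefix(tag, "v"), ".")
	if len(parts) < 2 || parts[0] == "" || parts[1] == "" {
		return "", fmt.Errorf("tag %q is not of the form vMAJOR.MINOR.PATCH", tag)
	}
	return "release-" + parts[0] + "." + parts[1], nil
}

func main() {
	branch, err := releaseBranch("v0.4.1")
	if err != nil {
		panic(err)
	}
	fmt.Println(branch)
}
```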

Update the API group in the PROJECT file

Please update the PROJECT file at the root of the provider’s repository to reflect the API group cluster.x-k8s.io:

version: "1"

domain: cluster.x-k8s.io

repo: sigs.k8s.io/cluster-api-provider-aws

Create the provider’s v1alpha2 resources

The new v1alpha2 types are located in pkg/apis/infrastructure/v1alpha2.

The cluster and machine resources

Providers no longer store configuration and status data for clusters and machines in the CAPI Cluster and Machine resources. Instead, this information is stored in two, new, provider-specific CRDs:

- pkg/apis/infrastructure/v1alpha2.ProviderCluster

- pkg/apis/infrastructure/v1alpha2.ProviderMachine

For example, the AWS provider defines:

- sigs.k8s.io/cluster-api-provider-aws/pkg/apis/infrastructure/v1alpha2.AWSCluster

- sigs.k8s.io/cluster-api-provider-aws/pkg/apis/infrastructure/v1alpha2.AWSMachine

The spec and status types

The Spec and Status types used to store configuration and status information are effectively the same in v1alpha2 as they were in v1alpha1:

Information related to kubeadm or certificates has been extracted and is now owned by the bootstrap provider and its corresponding resources, ex. KubeadmConfig.

Infrastructure provider cluster status fields

A CAPI v1alpha2 provider cluster status resource has two special fields, ready and apiEndpoints. For example, take the AWSClusterStatus:

// AWSClusterStatus defines the observed state of AWSCluster
type AWSClusterStatus struct {
    Ready bool `json:"ready"`

    // APIEndpoints represents the endpoints to communicate with the control plane.
    // +optional
    APIEndpoints []APIEndpoint `json:"apiEndpoints,omitempty"`
}

Infrastructure provider cluster status ready

A ProviderCluster’s status object must define a boolean field named ready and set the value to true only when the infrastructure required to provision a cluster is ready and available.

Infrastructure provider cluster status apiEndpoints

A ProviderCluster’s status object may optionally define a field named apiEndpoints that is a list of the following objects:

// APIEndpoint represents a reachable Kubernetes API endpoint.
type APIEndpoint struct {
    // The hostname on which the API server is serving.
    Host string `json:"host"`

    // The port on which the API server is serving.
    Port int `json:"port"`
}

If present, this field is automatically inspected in order to obtain an endpoint at which the Kubernetes cluster may be accessed.
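As an illustration of how a consumer might inspect this field, the sketch below turns the first listed endpoint into a host:port address. Choosing the first entry is an assumption made for this example, and firstEndpoint is a hypothetical helper rather than a Cluster API function.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// APIEndpoint mirrors the struct shown above.
type APIEndpoint struct {
	Host string `json:"host"`
	Port int    `json:"port"`
}

// firstEndpoint (hypothetical helper) returns the first endpoint as a
// "host:port" string, or false when the list is empty.
func firstEndpoint(endpoints []APIEndpoint) (string, bool) {
	if len(endpoints) == 0 {
		return "", false
	}
	ep := endpoints[0]
	// JoinHostPort also brackets IPv6 literals correctly.
	return net.JoinHostPort(ep.Host, strconv.Itoa(ep.Port)), true
}

func main() {
	endpoints := []APIEndpoint{
		{Host: "example.com", Port: 3333},
		{Host: "example.com", Port: 3334},
	}
	if addr, ok := firstEndpoint(endpoints); ok {
		fmt.Println("https://" + addr)
	}
}
```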

Create the infrastructure controllers

The actuator model from v1alpha1 has been replaced by the infrastructure controllers in v1alpha2:

The infrastructure provider cluster controller

Instead of processing the CAPI Cluster resources like the old actuator model, the new provider cluster controller operates on the new provider Cluster CRD. However, the overall workflow should feel the same as the old cluster actuator. For example, take the AWSCluster controller’s reconcile function, it:

- Fetches the AWSCluster resource:

    awsCluster := &infrastructurev1alpha2.AWSCluster{}
    err := r.Get(ctx, request.NamespacedName, awsCluster)
    if err != nil {
        if apierrors.IsNotFound(err) {
            return reconcile.Result{}, nil
        }
        return reconcile.Result{}, err
    }

- Fetches the CAPI cluster resource that has a one-to-one relationship with the AWSCluster resource:

    cluster, err := util.GetOwnerCluster(ctx, r.Client, awsCluster.ObjectMeta)
    if err != nil {
        return reconcile.Result{}, err
    }
    if cluster == nil {
        logger.Info("Waiting for Cluster Controller to set OwnerRef on AWSCluster")
        return reconcile.Result{}, nil
    }

  If the AWSCluster resource does not have a corresponding CAPI cluster resource then the reconcile will be reissued once the OwnerRef is assigned to the AWSCluster resource by the CAPI controller, triggering a new reconcile event.

- Uses a defer statement to ensure the AWSCluster resource is always patched back to the API server:

    defer func() {
        if err := clusterScope.Close(); err != nil && reterr == nil {
            reterr = err
        }
    }()

- Handles both deleted and non-deleted clusters resources:

    // Handle deleted clusters
    if !awsCluster.DeletionTimestamp.IsZero() {
        return reconcileDelete(clusterScope)
    }

    // Handle non-deleted clusters
    return reconcileNormal(clusterScope)

The infrastructure provider machine controller

The new provider machine controller is a slightly larger departure from the v1alpha1 machine actuator. This is because the machine actuator was based around a Create, Read, Update, Delete (CRUD) model. Providers implementing the v1alpha1 machine actuator would implement each of those four functions. However, this was just an abstract way to represent a Kubernetes controller’s reconcile loop.

The new, v1alpha2, provider machine controller merely takes the same CRUD model from the v1alpha1 machine actuator and applies it to a Kubernetes reconcile activity. The CAPI provider for vSphere (CAPV) actually includes a diagram that illustrates the v1alpha1 machine actuator CRUD operations as a reconcile loop.
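To make the idea concrete, here is a rough sketch of how CRUD-style actuator calls can be folded into a single reconcile pass. The machineActuator interface, reconcileMachine, and fakeActuator are hypothetical names chosen for this illustration; they are not the actual v1alpha1 or v1alpha2 Cluster API types.

```go
package main

import "fmt"

// machineActuator is a hypothetical CRUD-style interface, loosely modeled on
// the actuator idea described above. It is not the real Cluster API type.
type machineActuator interface {
	Exists(name string) bool
	Create(name string) error
	Update(name string) error
	Delete(name string) error
}

// reconcileMachine expresses the CRUD calls as one reconcile pass: it reads
// the current state, then picks the operation that moves toward the desired
// state. The returned string names the operation that was chosen.
func reconcileMachine(a machineActuator, name string, deleting bool) (string, error) {
	exists := a.Exists(name)
	switch {
	case deleting && exists:
		return "deleted", a.Delete(name)
	case deleting:
		return "gone", nil
	case exists:
		return "updated", a.Update(name)
	default:
		return "created", a.Create(name)
	}
}

// fakeActuator is an in-memory implementation used only for this demo.
type fakeActuator struct{ machines map[string]bool }

func (f *fakeActuator) Exists(name string) bool  { return f.machines[name] }
func (f *fakeActuator) Create(name string) error { f.machines[name] = true; return nil }
func (f *fakeActuator) Update(name string) error { return nil }
func (f *fakeActuator) Delete(name string) error { delete(f.machines, name); return nil }

func main() {
	a := &fakeActuator{machines: map[string]bool{}}
	// Two normal reconciles followed by two reconciles of a deleted machine.
	for _, deleting := range []bool{false, false, true, true} {
		op, err := reconcileMachine(a, "machine-0", deleting)
		fmt.Println(op, err)
	}
	// Prints:
	// created <nil>
	// updated <nil>
	// deleted <nil>
	// gone <nil>
}
```

Running the same function repeatedly converges on the desired state, which is the property that makes the CRUD model a natural fit for a reconcile loop.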

Reference

This section contains various resources that define the Cluster API project.

Table of Contents

A | B | C | D | H | I | K | M | N | O | P | S | T | W

A

Add-ons

Services beyond the fundamental components of Kubernetes.

- Core Add-ons: Add-ons that are required to deploy a Kubernetes-conformant cluster: DNS, kube-proxy, CNI.

- Additional Add-ons: Add-ons that are not required for a Kubernetes-conformant cluster (e.g. metrics/Heapster, Dashboard).

B

Bootstrap

The process of turning a server into a Kubernetes node. This may involve assembling data to provide when creating the server that backs the Machine, as well as runtime configuration of the software running on that server.

Bootstrap cluster

A temporary cluster that is used to provision a Target Management cluster.

C

CAPI

Core Cluster API

CAPA

Cluster API Provider AWS

CABPK

Cluster API Bootstrap Provider Kubeadm

CAPD

Cluster API Provider Docker

CAPG

Cluster API Google Cloud Provider

CAPO

Cluster API Provider OpenStack

CAPV

Cluster API Provider vSphere

CAPZ

Cluster API Provider Azure

Cluster

A full Kubernetes deployment. See Management Cluster and Workload Cluster.

Cluster API

Or Cluster API project

The Cluster API sub-project of the SIG-cluster-lifecycle. It is also used to refer to the software components, APIs, and community that produce them.

Control plane

The set of Kubernetes services that form the basis of a cluster. See also https://kubernetes.io/docs/concepts/#kubernetes-control-plane. There are two variants:

- Self-provisioned: A Kubernetes control plane consisting of pods or machines wholly managed by a single Cluster API deployment.

- External: A control plane offered and controlled by some system other than Cluster API (e.g., GKE, AKS, EKS, IKS).

D

Default implementation

A feature implementation offered as part of the Cluster API project. Infrastructure providers can swap it out for a different one.

H

Horizontal Scaling

The ability to add more machines based on policy and well defined metrics. For example, add a machine to a cluster when CPU load average > (X) for a period of time (Y).
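As a rough illustration of such a policy, the sketch below checks whether the CPU load average stayed above a threshold (X) for an entire trailing period (Y). The sample type, shouldScaleUp, demo, and the chosen values are all hypothetical; Cluster API itself does not define this function.

```go
package main

import (
	"fmt"
	"time"
)

// window is the hypothetical period (Y) used in the demo.
const window = 5 * time.Minute

// sample is a hypothetical CPU load average observation.
type sample struct {
	at   time.Time
	load float64
}

// shouldScaleUp reports whether every sample inside the trailing period
// exceeds the threshold. Samples must be ordered oldest first. It returns
// false when the history does not reach back to the start of the period or
// when no sample falls inside it.
func shouldScaleUp(samples []sample, now time.Time, threshold float64, period time.Duration) bool {
	if len(samples) == 0 {
		return false
	}
	start := now.Add(-period)
	if samples[0].at.After(start) {
		return false // not enough history to cover the period
	}
	inWindow := 0
	for _, s := range samples {
		if s.at.Before(start) {
			continue
		}
		inWindow++
		if s.load <= threshold {
			return false
		}
	}
	return inWindow > 0
}

// demo returns a fixed set of observations and the evaluation time.
func demo() ([]sample, time.Time) {
	now := time.Date(2020, 1, 1, 12, 0, 0, 0, time.UTC)
	return []sample{
		{now.Add(-6 * time.Minute), 2.0},
		{now.Add(-4 * time.Minute), 3.2},
		{now.Add(-2 * time.Minute), 3.5},
		{now, 3.1},
	}, now
}

func main() {
	samples, now := demo()
	fmt.Println(shouldScaleUp(samples, now, 3.0, window)) // true
	fmt.Println(shouldScaleUp(samples, now, 3.3, window)) // false
}
```

Requiring the whole period to be covered avoids adding a machine in response to a short spike or to incomplete metrics.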

Host

See Server

I

Infrastructure provider

A source of computational resources (e.g. machines, networking, etc.). Examples for cloud include AWS, Azure, Google, etc.; for bare metal include VMware, MAAS, metal3.io, etc. When there is more than one way to obtain resources from the same infrastructure provider (e.g. EC2 vs. EKS) each way is referred to as a variant.

Instance

See Server

Immutability

A resource that does not mutate. In Kubernetes we often state that the instance of a running pod is immutable, meaning it does not change once it is run. In order to make a change, a new pod is run. In the context of Cluster API, a running instance of a Machine is considered immutable.

K

Kubernetes-conformant

Or Kubernetes-compliant

A cluster that passes the Kubernetes conformance tests.

k/k

Refers to the main Kubernetes git repository or the main Kubernetes project.

M

Machine

Or Machine Resource

The Custom Resource for Kubernetes that represents a request to have a place to run kubelet.

See also: Server

Manage a cluster

Perform create, scale, upgrade, or destroy operations on the cluster.

Management cluster

The cluster where one or more Infrastructure Providers run, and where resources (e.g. Machines) are stored. Typically referred to when you are provisioning multiple clusters.

N

Node pools

A node pool is a group of nodes within a cluster that all have the same configuration.

O

Operating system

Or OS

A generically understood combination of a kernel and system-level userspace interface, such as Linux or Windows, as opposed to a particular distribution.

P

Pivot

Pivot is a process for moving the provider components and declared cluster-api resources from a Source Management cluster to a Target Management cluster.

The pivot process is also used for deleting a management cluster and could also be used during an upgrade of the management cluster.

Provider

Provider components

Refers to the YAML artifact that a provider publishes as part of its releases and that is required to use the provider components. It usually contains Custom Resource Definitions (CRDs), Deployments (to run the controller manager), RBAC, etc.

Provider implementation

Existing Cluster API implementations consist of generic and infrastructure provider-specific logic. The infrastructure provider-specific logic is currently maintained in infrastructure provider repositories.

S

Scaling

Unless otherwise specified, this refers to horizontal scaling.

Server

The infrastructure that backs a Machine Resource, typically either a cloud instance, virtual machine, or physical host.

T

Target Management cluster

The declared cluster we intend to create and manage using cluster-api when running clusterctl create cluster.

When running clusterctl alpha phases pivot this refers to the cluster that will be the new management cluster.

W

Provider Implementations

The code in this repository is independent of any specific deployment environment. Provider-specific code is being developed in separate repositories, some of which are also sponsored by SIG Cluster Lifecycle. Providers marked in bold are known to support v1alpha2 API types.

Bootstrap

Infrastructure

- AWS

- Azure

- Baidu Cloud

- Bare Metal

- DigitalOcean

- Exoscale

- GCP

- IBM Cloud

- OpenStack

- Packet

- Tencent Cloud

- vSphere

API Adopters

The following implementations are managed by third parties adopting the standard cluster-api and/or machine-api being developed here.

Using clusterctl to create a cluster from scratch

This document provides an overview of how clusterctl works and explains how one can use clusterctl to create a Kubernetes cluster from scratch.

What is clusterctl?

clusterctl is a CLI tool to create a Kubernetes cluster. clusterctl is provided by the provider implementations.

It uses Cluster API provider implementations to provision resources needed by the Kubernetes cluster.

Creating a cluster

clusterctl needs 4 YAML files to start with: provider-components.yaml, cluster.yaml, machines.yaml, addons.yaml.

- provider-components.yaml contains the Custom Resource Definitions (CRDs) of all the resources that are managed by Cluster API. Some examples of these resources are: Cluster, Machine, MachineSet, etc. For more details about Cluster API resources click here.

- cluster.yaml defines an object of the resource type Cluster.

- machines.yaml defines an object of the resource type Machine. Generally creates the machine that becomes the control-plane.

- addons.yaml contains the addons for the provider.

Many provider implementations come with helpful scripts to generate these YAML files. Provider implementations can be found here.

clusterctl also comes with additional features. For example, clusterctl can also take in an optional

bootstrap-only-components.yaml to provide resources to the bootstrap cluster without also providing them